Biography

Qianyu He (何千羽) is a fourth-year PhD candidate in the School of Computer Science at Fudan University. Her research focuses on enhancing the fundamental reasoning and instruction-following capabilities of large language models (LLMs):

- Reasoning Model: Advancing research on incentivizing and understanding LLMs’ complex reasoning abilities.

- Instruction Following: Developing advanced methods for LLMs to follow complex instructions, ensuring more reliable human-LLM interactions and empowering autonomous completion of complex real-world tasks.

🌟 I am currently on the job market! I am actively seeking research and industry positions related to large language models, reasoning, and instruction following. If you are interested in collaboration or have an open position, please feel free to contact me. For more details, please refer to my Resume.

- Reasoning Model

- Instruction Following

- Dancing 💃

PhD in CS, 2021-2026 (estimated)

Fudan University

B.S. in CS, 2017-2021

Fudan University

Experience

Topics: Reasoning Model, Long Chain-of-thought.

Projects: Seed-Thinking-v1.5, Doubao-1.5-pro-AS1-Preview

News

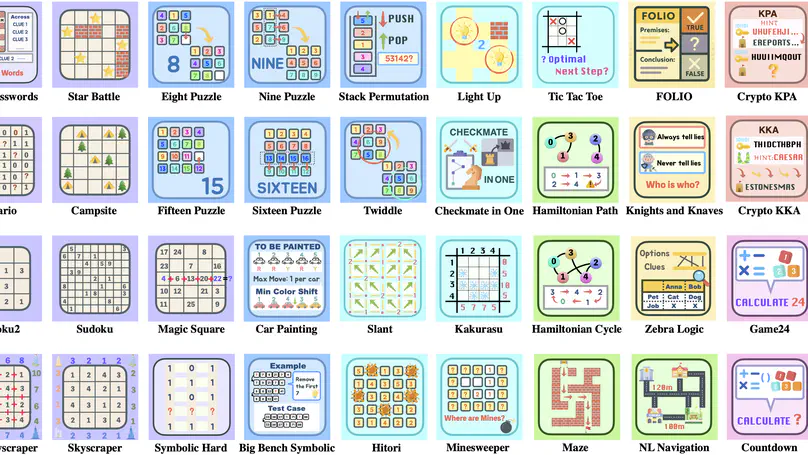

May. 2025 🎉 Check out Enigmata, the first comprehensive suite tailored for improving LLMs with puzzle reasoning skills.

May. 2025 🎉 Check out KORGym, a dynamic game platform for LLM reasoning evaluation.

May. 2025 🎉 Two papers on enhancing instruction following have been accepted to Findings of ACL 2025! The first paper enhances the soft constraint following ability of LLMs, and the second investigates position bias in multi-constraint instruction following.

Apr. 2025 🎉 We introduce Seed1.5-Thinking, capable of reasoning through thinking before responding, resulting in improved performance on a wide range of benchmarks.

Mar. 2025 🎉 Our Instruction Following Benchmark CELLO was used by Hunyuan-Thinker-1-Preview for instruction following evaluation.

Jan. 2025 🎉 Our paper Think Thrice Before You Act: Progressive Thought Refinement in Large Language Models was accepted to ICLR 2025!

Nov. 2024 👀 Joined ByteDance Seed-LLM-Horizon as a research intern to work on reasoning models.

Aug. 2024 🎉 How can we improve LLMs’ ability to follow complex instructions? Our paper From Complex to Simple: Enhancing Multi-Constraint Complex Instruction Following Ability of Large Language Models was accepted to Findings of EMNLP 2024!

May. 2024 👀 Joined StepFun Foundation Model Group as a research intern to work on LLM reasoning research.

May. 2024 🔔 Gave a talk at Alibaba Tongyi Lab, titled: “Complex Instruction Following Ability of Large Language Models”. Thanks for the invitation!

Awards

Featured Publications

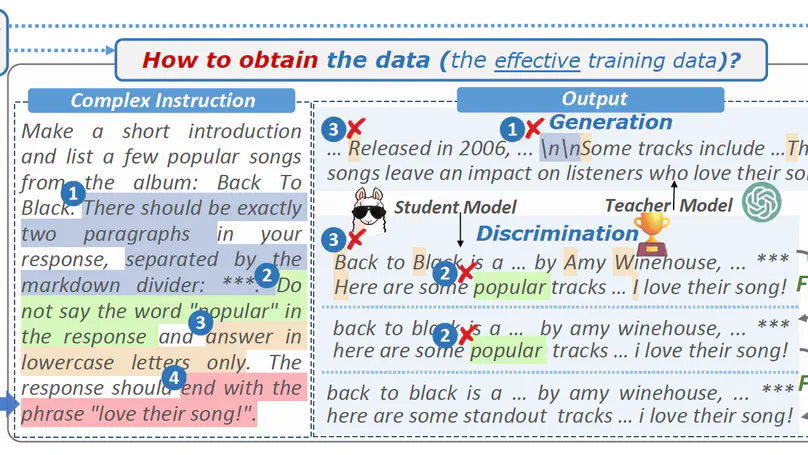

It is imperative for large language models (LLMs) to follow instructions with elaborate requirements (i.e., complex instruction following). Yet, it remains under-explored how to enhance the ability of LLMs to follow complex instructions with multiple constraints. To bridge the gap, we first study what training data is effective in enhancing the ability to follow complex constraints. We find that training LLMs with instructions containing multiple constraints enhances their understanding of complex instructions, especially those with lower complexity levels. The improvement can even generalize to compositions of out-of-domain constraints. Additionally, we propose methods for obtaining and utilizing the effective training data. Finally, we conduct extensive experiments to demonstrate the effectiveness of our methods in terms of overall performance, training efficiency, and generalization abilities under four settings.

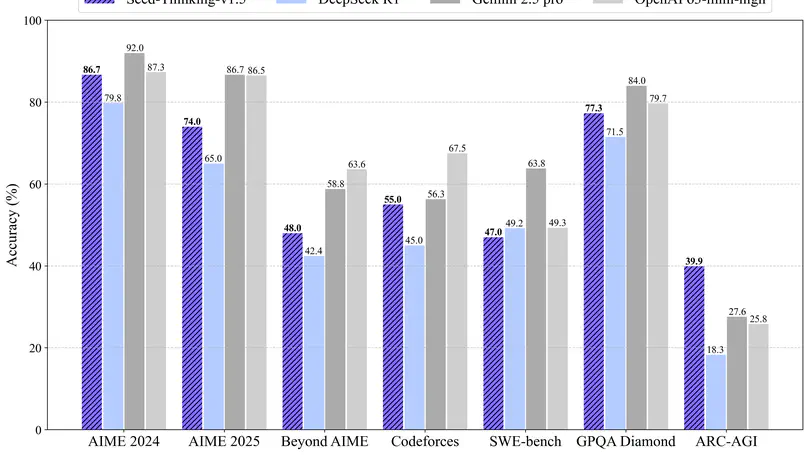

We introduce Seed1.5-Thinking, capable of reasoning through thinking before responding, resulting in improved performance on a wide range of benchmarks. Seed1.5-Thinking achieves 86.7 on AIME 2024, 55.0 on Codeforces and 77.3 on GPQA, demonstrating excellent reasoning abilities in STEM and coding. Beyond reasoning tasks, the method demonstrates notable generalization across diverse domains. For instance, it surpasses DeepSeek R1 by 8% in win rate on non-reasoning tasks, indicating its broader applicability. Compared to other state-of-the-art reasoning models, Seed1.5-Thinking is a Mixture-of-Experts (MoE) model with a relatively small size, featuring 20B activated and 200B total parameters. As part of our effort to assess generalized reasoning, we develop two internal benchmarks, BeyondAIME and Codeforces, both of which will be publicly released to support future research.

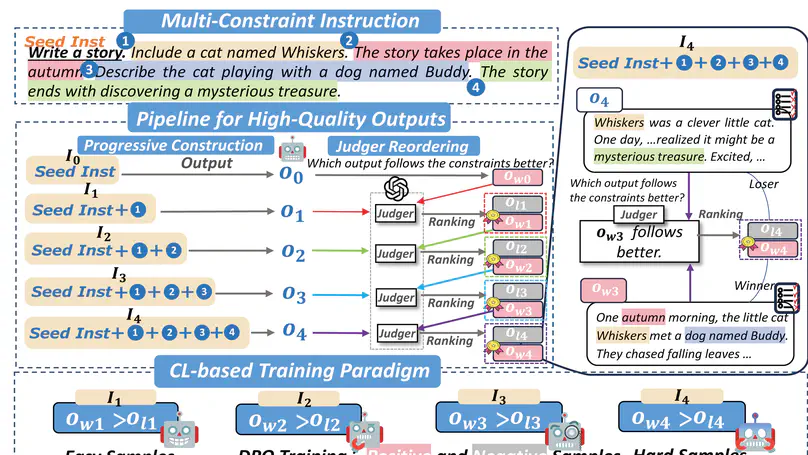

It is crucial for large language models (LLMs) to follow instructions that involve multiple constraints. However, enhancing LLMs’ ability to follow soft constraints remains unexplored. To bridge the gap, we first design a pipeline that automatically constructs datasets with high-quality outputs. Additionally, to fully utilize the positive and negative samples generated during the data construction process, we choose Direct Preference Optimization (DPO) as the training method. Furthermore, since the difficulty of soft constraints is indicated by the number of constraints, we design a curriculum learning training paradigm based on the constraint quantity. We experimentally evaluate the effectiveness of our methods in improving LLMs’ soft constraint following ability and analyze the factors driving the improvements.
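As a rough illustration of the two ideas named in this abstract, the sketch below orders preference pairs by constraint count (a simple curriculum) and computes the standard DPO loss for a single pair from sequence log-probabilities. It is not the paper's implementation: the `PreferencePair` class, the `num_constraints` field, the function names, and the `beta=0.1` default are all hypothetical choices made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class PreferencePair:
    """Hypothetical container for one DPO training example."""
    prompt: str
    chosen: str           # positive sample (constraints satisfied)
    rejected: str         # negative sample (some constraint violated)
    num_constraints: int  # assumed proxy for difficulty


def curriculum_order(pairs):
    """Order pairs from fewest to most constraints (easy to hard).

    sorted() is stable, so pairs with equal counts keep their input order.
    """
    return sorted(pairs, key=lambda p: p.num_constraints)


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one pair: -log(sigmoid(beta * margin)).

    Inputs are summed token log-probabilities of each response under the
    policy being trained and under a frozen reference model.
    """
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    pairs = [
        PreferencePair("p3", "good", "bad", num_constraints=3),
        PreferencePair("p1", "good", "bad", num_constraints=1),
        PreferencePair("p2", "good", "bad", num_constraints=2),
    ]
    for pair in curriculum_order(pairs):
        print(pair.prompt, pair.num_constraints)
    # The loss falls as the policy prefers the chosen response more strongly.
    print(dpo_loss(-10.0, -14.0, -12.0, -12.0))
```

In a real training run the log-probabilities would come from model forward passes, and the curriculum could be applied in stages rather than as one sorted pass; both details are left out here.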

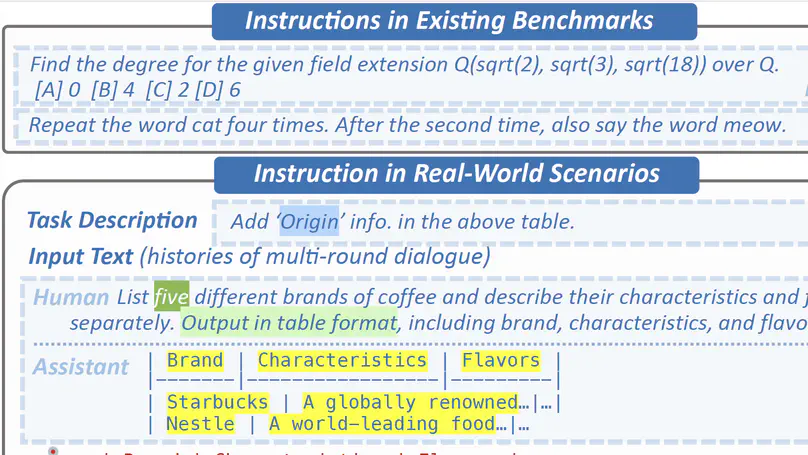

Large language models (LLMs) can understand human instructions, showing their potential for pragmatic applications beyond traditional NLP tasks. However, they still struggle with complex instructions, which can be either complex task descriptions that require multiple tasks and constraints, or complex input that contains long context, noise, heterogeneous information, and multi-turn format. Due to these features, LLMs often ignore semantic constraints from task descriptions, generate incorrect formats, violate length or sample count constraints, and are unfaithful to the input text. Existing benchmarks are insufficient to assess LLMs’ ability to understand complex instructions, as they are close-ended and simple. To bridge this gap, we propose CELLO, a benchmark for systematically evaluating LLMs’ ability to follow complex instructions. We design eight features for complex instructions and construct a comprehensive evaluation dataset from real-world scenarios. We also establish four criteria and develop corresponding metrics, as current ones are inadequate, biased, or too strict and coarse-grained. We compare the performance of representative Chinese-oriented and English-oriented models in following complex instructions through extensive experiments.